AI Tech Stack: A Simple Breakdown

Discussions about the AI tech stack often sound more complex than they really are. In reality, it's just the structure that helps data flow smoothly through an AI system.

Each layer has a part in turning disorganized input into working intelligence. Without that structure, even advanced tools struggle to deliver consistent results.

The guide ahead explains how every layer connects, what matters most when setting up your stack, and how you can skip the usual setup problems and start faster.

What Is an AI Technology Stack?#

An AI tech stack is the full setup that makes artificial intelligence work. It includes tools, apps, and storage systems that help computers learn from data and make smart choices. Each layer in the stack does one job, and together they turn information into something useful.

The process starts with collecting raw data. That can come from websites, apps, or sensors. The data then gets cleaned through data processing tools so it's ready for use.

Once it's cleaned, you store it in a data mart or a larger data warehouse. Some teams also compare a data warehouse with a marketing database to see which one fits their needs better.

After that, data scientists and engineers use AI frameworks to train machine learning models. These models power real AI applications that help in business operations such as chatbots, recommendations, or support systems.

Core Layers of the AI Tech Stack#

An AI tech stack has several key components that keep everything running smoothly. Each layer handles a specific job, from managing data to training models with AI frameworks that bring ideas to life.

1. Data Layer#

The data layer is the base of the whole AI tech stack. Every system starts here because, without good data, nothing else works.

Your data can be anything, such as text, numbers, pictures, or sound, and it usually comes from many sources, like websites, apps, or sensors. That mix of structured and unstructured data needs to be organized so computers can actually read it.

After gathering, the next step is cleaning. You remove duplicates, fix errors, and fill in missing details. Many pipelines also encode categorical variables into numerical formats so models can work with categories like "red," "blue," or "green."
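Here is a minimal sketch of those cleaning steps in plain Python. The records, the `price` and `color` fields, and the choice to fill gaps with the mean are all assumptions made for illustration; real pipelines usually rely on a data library and pick a fill strategy that suits the data.

```python
from statistics import mean

# Hypothetical raw records: one exact duplicate and one missing price.
raw_rows = [
    {"color": "red", "price": 10.0},
    {"color": "blue", "price": None},
    {"color": "red", "price": 10.0},   # duplicate of the first row
    {"color": "green", "price": 14.0},
]

def clean(rows):
    """Drop exact duplicates, then fill missing prices with the mean price."""
    seen, unique = set(), []
    for row in rows:
        key = (row["color"], row["price"])
        if key not in seen:
            seen.add(key)
            unique.append(dict(row))
    known = [r["price"] for r in unique if r["price"] is not None]
    fill = mean(known)
    for r in unique:
        if r["price"] is None:
            r["price"] = fill
    return unique

def one_hot(rows, column):
    """Replace a categorical column with one 0/1 column per category."""
    categories = sorted({r[column] for r in rows})
    encoded = []
    for r in rows:
        out = {k: v for k, v in r.items() if k != column}
        for c in categories:
            out[f"{column}_{c}"] = 1 if r[column] == c else 0
        encoded.append(out)
    return encoded

cleaned = clean(raw_rows)
encoded = one_hot(cleaned, "color")
```

After this runs, `cleaned` holds three rows instead of four, and each row in `encoded` carries `color_red`, `color_blue`, and `color_green` flags in place of the text label.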

Once cleaned, the data is stored in systems such as data lakes, data marts, or data warehouses, depending on the goal. These storage systems make sure information is ready when needed.

2. Model Layer#

The model layer is where the learning starts. After the data is ready, data scientists and AI engineers use it to teach computers how to recognize patterns. They choose a machine learning algorithm that fits the task.

Some use traditional machine learning algorithms, such as regression to predict numerical values, classification to sort items, or clustering to group similar items.

They also use special tools called AI frameworks, like TensorFlow or PyTorch, to train machine learning models. The model keeps checking its guesses against the correct answers in the training data, then adjusts itself each time to get better.
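The "guess, compare, adjust" loop can be sketched without any framework. The example below fits a straight line with gradient descent; the toy data, learning rate, and epoch count are assumptions chosen for illustration, and frameworks like TensorFlow or PyTorch automate the same idea at a much larger scale.

```python
# Toy training data: inputs x with correct answers y, following y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]

def train(xs, ys, learning_rate=0.05, epochs=2000):
    """Fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Compare the current guesses with the correct answers.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        # Nudge the parameters in the direction that reduces the error.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b

w, b = train(xs, ys)
```

With these settings, `w` and `b` settle very close to 2 and 1, the values that generated the data.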

The final version gets sent to the deployment layer, where it starts working in real apps like chatbots, image filters, or voice assistants.

3. Deployment Layer#

The deployment layer is where an AI model finally gets to do its actual job. Up to this point, the model has only been tested in a safe space.

Here, it moves into real use, often inside apps, websites, or business systems. It connects the model's predictions to everyday work, like helping a chatbot respond to customers or spotting patterns in sales data.
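In practice, "moving into real use" often means wrapping the model in a small function that an app or website can call. The sketch below is one assumed shape for that wrapper: the `MODEL` values, the `handle_request` name, and the JSON format are all hypothetical, and a web framework would normally sit in front of it.

```python
import json

# Parameters that a training step might have produced (illustrative values).
MODEL = {"w": 2.0, "b": 1.0}

def predict(x: float) -> float:
    """Apply the trained parameters to one input."""
    return MODEL["w"] * x + MODEL["b"]

def handle_request(body: str) -> str:
    """Turn a JSON request such as {"x": 3} into a JSON response."""
    try:
        payload = json.loads(body)
        x = float(payload["x"])
    except (ValueError, KeyError, TypeError):
        # Bad input gets a clear error instead of crashing the service.
        return json.dumps({"error": 'expected JSON like {"x": 3}'})
    return json.dumps({"prediction": predict(x)})

ok_response = handle_request('{"x": 3}')
bad_response = handle_request("not json")
```

Keeping the input checks next to the prediction call means malformed requests return an error message rather than taking the service down.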

Developers often use Docker to pack everything the model needs into one bundle so it runs the same way everywhere. Systems like Kubernetes manage these bundles, so they remain active even as traffic grows.

4. Monitoring and Optimization Layer#

The monitoring and optimization layer is like the health check system for AI. After deployment, it watches everything the model does to make sure it stays accurate.

It also monitors model drift, which occurs when model performance declines due to changes in the data.

For instance, if a model was trained on older sales trends, it might give weak predictions once new patterns appear. When that happens, you collect new data and retrain the model so it learns the new trends.
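One simple way to notice that kind of shift is to compare recent data with the data the model was trained on. The sketch below is an assumption-heavy illustration: the sales figures are made up, and the threshold of two standard deviations is an arbitrary choice, not a standard.

```python
from statistics import mean, stdev

def drift_score(baseline, recent):
    """How many baseline standard deviations the recent mean has shifted."""
    return abs(mean(recent) - mean(baseline)) / stdev(baseline)

def needs_retraining(baseline, recent, threshold=2.0):
    """Flag drift when the shift exceeds the chosen threshold."""
    return drift_score(baseline, recent) > threshold

# Hypothetical daily sales used for training vs. what the model sees now.
training_sales = [100, 102, 98, 101, 99, 100]
stable_sales = [101, 99, 100, 102]
shifted_sales = [130, 128, 133, 131]

stable_flag = needs_retraining(training_sales, stable_sales)
shifted_flag = needs_retraining(training_sales, shifted_sales)
```

Here the stable week stays under the threshold, while the shifted week is flagged for retraining. Production monitoring tools use richer statistical tests, but the principle is the same.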

The data gathered here goes back to the earlier layers, improving the next version of the model. It's a cycle of feedback and fixes that keeps the AI stack improving.

How AI Tech Stack Parts Work Together#

Each part of the AI tech stack depends on the one before it. Data starts the process, then moves through several stages until it becomes part of a working tool or product. Every layer in the stack has its own job, but it connects tightly with the next.

The process begins with data ingestion, where the system collects raw data from websites, sensors, or apps. That data moves into the data layer, where it gets cleaned and organized.

The infrastructure layer provides the storage and computing power needed for big data processing. It also gives access to CPUs, GPUs, and TPUs that make heavy calculations possible.

Once the data is ready, it flows into the model layer, where developers train and test models. These models are then passed to the deployment layer, where they become real AI applications people can use.

The monitoring layer watches everything after launch, checking accuracy and collecting feedback.

All these AI tech stack components work together to form a cycle that never stops learning, improving, and producing smarter systems over time.

Why the AI Tech Stack Matters#

An AI tech stack connects the tools that handle data, train models, and deliver real results. Without it, your projects would take longer, cost more, and fail to adapt as they grow.

A well-built stack keeps the AI lifecycle organized from data collection to deployment and monitoring. Besides that, it:

- Supports smoother development and deployment because teams have connected tools and clear workflows that prevent delays and errors.

- Keeps AI projects scalable since the system can handle larger datasets and more complex models without breaking existing parts.

- Helps maintain flexibility by letting teams upgrade one tool or service at a time.

- Drives better decision-making since models can process data faster and give insights that improve business planning.

- Increases efficiency by automating manual tasks like report creation or customer service responses.

- Improves operational performance by allowing AI to work continuously with fewer system interruptions.

- Strengthens teamwork since everyone involved, from software engineering to management, understands how the stack connects.

- Protects sensitive data through encryption and access control, which reduces the risk of leaks.

- Maximizes AI investments by delivering faster and more reliable returns on every project.

AI Software and Technologies in a Modern Tech Stack#

These are the common AI solutions and technologies in a modern technology stack:

Data and ETL#

The data and ETL layer prepares information for AI. It collects, cleans, and organizes everything before it's used for AI model training. The process covers all parts of data management, from where data comes from to where it's stored.

ETL stands for extract, transform, and load.

"Extract" pulls data from different sources like websites, sensors, and files. "Transform" means cleaning it, fixing errors, and shaping it into usable forms. "Load" then moves the prepared data into systems like a data warehouse or data lake, where it can be used later.

Data often comes in two types: structured data and unstructured data. Both need special handling to make sense to computers.
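The extract, transform, and load steps can be sketched in a few lines of standard-library Python. The CSV text, the `sales` table, and the cleaning rules are assumptions for illustration; an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Stand-in for a source file; real pipelines would read from files or APIs.
RAW_CSV = """name,amount
 Alice ,10.5
Bob,not_a_number
carol,7
"""

def extract(text):
    """Extract: read rows from the raw source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: tidy names, parse amounts, drop rows that cannot be parsed."""
    cleaned = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue
        cleaned.append((row["name"].strip().title(), amount))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (name TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
stored = conn.execute("SELECT name, amount FROM sales ORDER BY name").fetchall()
```

The row with an unreadable amount is dropped during the transform step, so only the two valid rows reach storage.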

During transformation, engineers perform feature engineering, which means creating the most useful inputs for the AI to learn from. Once prepared, this clean data goes into storage systems that are ready for analysis and training.
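As a small illustration of feature engineering, the sketch below turns one raw order record into numeric inputs. The field names and the three derived features are hypothetical; which features are actually useful depends on the problem.

```python
from datetime import date

def build_features(order):
    """Turn one raw order record into numeric model inputs."""
    placed = date.fromisoformat(order["placed_on"])
    return {
        # weekday() returns 5 for Saturday and 6 for Sunday.
        "is_weekend": 1 if placed.weekday() >= 5 else 0,
        "price_per_item": order["total"] / order["items"],
        "is_repeat_customer": 1 if order["previous_orders"] > 0 else 0,
    }

# Hypothetical raw record; the field names are assumptions for this sketch.
order = {"placed_on": "2024-06-15", "total": 60.0, "items": 3, "previous_orders": 2}
features = build_features(order)
```

A date string and a running total are hard for a model to use directly, while a weekend flag and a per-item price are ready-made signals.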

Without this preparation, even the smartest algorithms wouldn't work well because they'd be learning from messy or incomplete information.

Modeling#

The modeling layer is the part of the stack that turns data into intelligence. Data scientists use machine learning frameworks to build and train models.

Everything in this layer depends on the data prepared earlier. The cleaned information becomes training data that the model uses to find patterns. Each training pass compares the model's predictions with known answers so it can correct its mistakes.

Once trained, the model is checked to make sure it performs well, then saved for later use. Cloud platforms such as Google Cloud make this process faster because they provide the computing power needed to train even a large language model.
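The "check, then save" step might look like the sketch below. The one-parameter model, the held-out examples, the 0.9 quality bar, and the `model.json` file name are all assumptions for illustration; real projects use their framework's own evaluation and serialization tools.

```python
import json
import os
import tempfile

# Illustrative "trained" model: a single learned threshold.
params = {"threshold": 5.0}

def predict(params, x):
    """Label an input 1 if it reaches the threshold, otherwise 0."""
    return 1 if x >= params["threshold"] else 0

def evaluate(params, examples):
    """Fraction of held-out examples the model labels correctly."""
    correct = sum(1 for x, label in examples if predict(params, x) == label)
    return correct / len(examples)

# Held-out examples that were not used during training.
held_out = [(2.0, 0), (4.0, 0), (6.0, 1), (9.0, 1)]
score = evaluate(params, held_out)

# Save the model only if it clears the quality bar.
model_path = os.path.join(tempfile.gettempdir(), "model.json")
if score >= 0.9:
    with open(model_path, "w") as f:
        json.dump(params, f)

# Later, a deployment step can load the saved parameters back.
with open(model_path) as f:
    restored = json.load(f)
```

Checking on examples the model has never seen is what tells you whether it learned a pattern or only memorized its training data.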

Storage and Databases#

The storage and databases part of the AI tech stack is where everything is kept safe and organized. It handles huge amounts of information and makes sure it's easy to access when needed. Every type of data, structured, semi-structured, and unstructured, has its place here.

Different systems are used for different needs. A data lake stores large sets of raw and unstructured information, while a data warehouse keeps cleaned and organized data that's ready for analysis.

Databases like MySQL or PostgreSQL manage day-to-day records. Some systems, called feature stores, provide precomputed inputs for AI models so the same features are used during training and in deployment.

For newer AI applications, a vector database is often added. It stores information as numerical vectors so AI can quickly find related items, which is important for search tools and chatbots.
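The core operation behind a vector database is a similarity search. The sketch below does it by brute force with cosine similarity; the three-number "embeddings" are hand-made assumptions, whereas real systems get vectors from an embedding model and use specialized indexes to search quickly.

```python
import math

def cosine_similarity(a, b):
    """Similarity of two vectors by the angle between them (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Tiny hand-made "embeddings"; real systems get these from an embedding model.
index = {
    "refund policy": [0.9, 0.1, 0.0],
    "shipping times": [0.1, 0.9, 0.1],
    "password reset": [0.0, 0.1, 0.9],
}

def nearest(query_vector, k=1):
    """Return the k stored items most similar to the query vector."""
    ranked = sorted(
        index,
        key=lambda name: cosine_similarity(index[name], query_vector),
        reverse=True,
    )
    return ranked[:k]

result = nearest([0.8, 0.2, 0.0])
```

The query vector points in nearly the same direction as the "refund policy" entry, so that item comes back first.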

Together, these storage tools form the base for data storage in any modern AI setup.

Monitoring Tools#

Monitoring platforms keep watch over everything after deployment to make sure models keep performing well and don't drift away from their original accuracy.

This software tracks how much computing power is being used, how clean the input data is, and whether the predictions still make sense.

When something changes, such as when the results stop matching real outcomes, the system sends an alert. Engineers then look at the logs, check the statistics, and retrain the model if needed.
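An alert like that can be as simple as tracking accuracy over the most recent predictions. The sketch below is a minimal illustration, not how any particular monitoring platform works; the `AccuracyMonitor` class, the window of five, and the 0.8 cutoff are all assumptions.

```python
from collections import deque

class AccuracyMonitor:
    """Track recent prediction outcomes and flag when accuracy drops."""

    def __init__(self, window=5, min_accuracy=0.8):
        self.outcomes = deque(maxlen=window)  # keeps only the newest results
        self.min_accuracy = min_accuracy

    def record(self, predicted, actual):
        self.outcomes.append(predicted == actual)

    def accuracy(self):
        return sum(self.outcomes) / len(self.outcomes)

    def should_alert(self):
        # Wait for a full window so one early miss does not trigger an alert.
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.accuracy() < self.min_accuracy

monitor = AccuracyMonitor()
for predicted, actual in [(1, 1), (0, 0), (1, 1), (1, 1), (0, 0)]:
    monitor.record(predicted, actual)
healthy = monitor.should_alert()     # all five recent predictions were right

for predicted, actual in [(1, 0), (0, 1)]:
    monitor.record(predicted, actual)
degraded = monitor.should_alert()    # only three of the last five were right
```

Using a rolling window means old successes cannot hide a recent decline.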

Platforms like Prometheus, Grafana, or ELK Stack show these metrics in real time through visual dashboards. Other tools, such as Arize AI or Fiddler, go deeper by checking for fairness and accuracy.

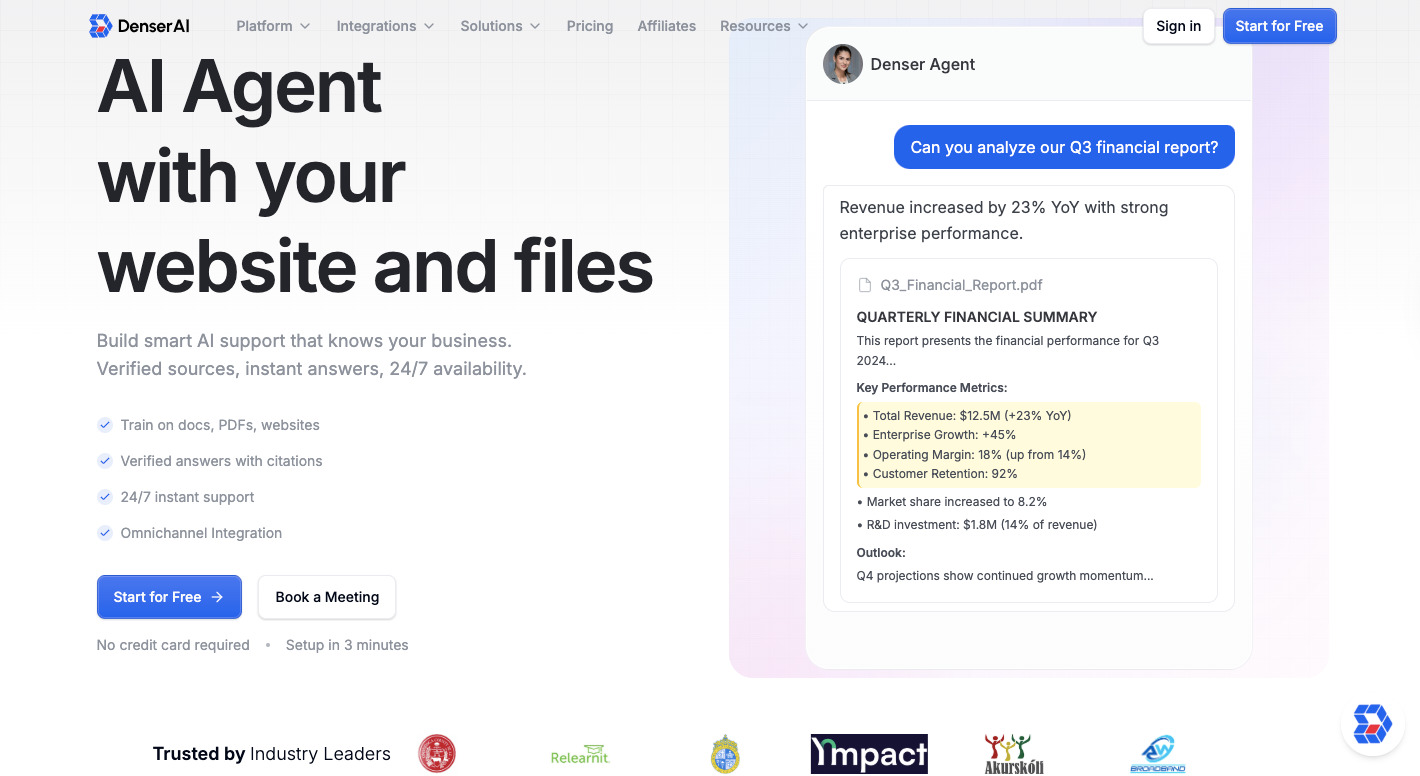

Simplify AI Stack Management With Denser#

Denser makes handling the modern AI stack simple for any business. It's a no-code platform that builds smart chatbots using your own documents, web pages, or files. These chatbots can handle customer questions, generate leads, and share accurate information in seconds.

Everything runs on retrieval-augmented generation (RAG), combined with natural language processing, so the AI understands the context of each question rather than just matching keywords. As a result, answers are grounded in your own content.

Setting up Denser takes only a few steps. You upload your data, and the platform automatically scans, organizes, and indexes it to build a searchable knowledge base.

When you ask questions, Denser finds the most relevant content, forms a natural response, and even shows the source it used.
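To show the general retrieve-then-answer pattern, here is a deliberately simplified sketch. It is not Denser's implementation: the documents are made up, simple word overlap stands in for real semantic retrieval, and the retrieved passage is returned as-is where a real system would hand it to a language model.

```python
import re

# Hypothetical knowledge base: source name -> text.
DOCUMENTS = {
    "returns.md": "Items can be returned within 30 days of delivery.",
    "shipping.md": "Standard shipping takes three to five business days.",
}

def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question):
    """Return the (source, text) pair sharing the most words with the question."""
    q = tokenize(question)
    return max(DOCUMENTS.items(), key=lambda item: len(q & tokenize(item[1])))

def answer(question):
    source, passage = retrieve(question)
    # A real system would pass the passage to a language model here.
    return {"answer": passage, "source": source}

reply = answer("How many days does shipping take?")
```

Returning the source alongside the answer is what lets a reader verify where the information came from.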

It also uses standard data security measures like encryption to protect information while it's stored or shared.

Why Denser Is the Future of AI Infrastructure#

Denser gives you the ability to use generative AI solutions without coding or complex setup. The platform fits directly into daily tools and workflows, providing AI capabilities that help you work smarter and respond faster.

The user interface makes AI interactions feel natural, and its seamless integration connects easily with apps like WordPress, Slack, and Shopify, enabling teams to launch AI tools that work right away.

You also get:

Simplified Data Layer Management#

Denser simplifies how data is handled. It automatically scans and organizes documents, web pages, and files to create a searchable knowledge base.

Using RAG technology, it finds the most relevant information before generating an answer. Each response includes a source link, which helps users confirm the accuracy of the information.

Real-Time Data Access With Database Tools#

Denser connects directly to your database to give real-time answers. When you ask a question, the system translates it into a database query, runs it securely, and replies in plain language.

For example, if you ask, "What's the balance on account 1023?" Denser reads your database and replies instantly with the current data. It supports systems like MySQL, PostgreSQL, Oracle, and SQL Server, using encrypted connections for safety.
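The question-to-query flow can be illustrated with a toy example. This is not Denser's method: a regular expression stands in for the language model that would normally translate the question, and the `accounts` table, its contents, and the `answer_balance_question` helper are all hypothetical.

```python
import re
import sqlite3

# In-memory database with one made-up account for the demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (id INTEGER PRIMARY KEY, balance REAL)")
conn.execute("INSERT INTO accounts VALUES (1023, 250.75)")

def answer_balance_question(question, conn):
    """Answer a plain-language question about one account's balance."""
    match = re.search(r"balance on account (\d+)", question, re.IGNORECASE)
    if match is None:
        return "Sorry, I can only answer account balance questions."
    account_id = int(match.group(1))
    # Parameterized query: the user's text is never pasted into the SQL string.
    row = conn.execute(
        "SELECT balance FROM accounts WHERE id = ?", (account_id,)
    ).fetchone()
    if row is None:
        return f"No account {account_id} was found."
    return f"The balance on account {account_id} is {row[0]:.2f}."

reply = answer_balance_question("What's the balance on account 1023?", conn)
```

Whatever does the translation, passing values as query parameters rather than building SQL from raw text is a common safeguard against injection.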

Advanced Modeling and Deployment#

Denser uses machine learning to analyze user questions and improve over time. The platform relies on structured data and context to refine how its AI responds. Its no-code setup means models are deployed instantly and integrated with your existing systems.

Monitoring and Optimization#

Built-in tracking tools record every interaction, showing what works and what needs improvement. Users can review chat logs, performance reports, and usage data to fine-tune results. Over time, Denser learns from every question to become more accurate and efficient.

Book a demo today and see how Denser connects every layer of your AI tech stack without the hassle of managing multiple tools!

Construct Your Complete AI Space—Use Denser!#

Denser fits into the idea of a full modern AI tech stack by bringing together everything that makes AI work: data, models, and deployment.

It handles the hardest parts of setup and automation so you can focus on using AI, not building it. Every chatbot built with Denser runs on retrieval-augmented generation, a process that finds accurate answers from your own data and then generates clear responses.

Combined with natural language processing, it understands the meaning behind questions, not just the words. That's how it gives human-like answers.

Denser also makes AI deployment simple. Once your chatbot is trained, it can go live on your website, app, or internal system within minutes.

In a complex AI development landscape, Denser stands out by turning deep technical work into practical, deployable AI solutions that bridge the gap between development and real-world impact.

Try Denser free or book a live demo to see how we outpace legacy AI systems!

FAQs About AI Tech Stack#

What are the four types of AI technology?#

The four types of AI technology are:

- Reactive machines perform specific tasks without learning from past experiences.

- Limited-memory AI can analyze historical data to make more accurate predictions.

- Theory-of-mind AI, which is still largely a research goal, would understand emotions and human intentions and so allow more natural communication.

- Self-aware AI, which still exists only in theory, would have consciousness and the ability to make independent decisions.

Only the first two types exist in deployed systems today. How well they perform depends on how engineers train and deploy them, whether they use open-source or closed-source models.

What is the 30% rule in AI?#

The 30% rule in AI is an informal guideline rather than a formal standard. It suggests that automation should handle up to about 30% of an employee's workload. It's not meant to replace humans but to make their jobs easier by automating repetitive or data-heavy tasks.

What is the most popular AI stack?#

The most popular AI stack includes tools like TensorFlow, PyTorch, and cloud services such as AWS, Azure, and Google Cloud. These tools form the foundation of most AI projects because they cover everything from data processing to model deployment.

Which is the best AI stock to buy?#

As of now, the best AI stocks to buy are those from companies leading in research and production, like NVIDIA, Microsoft, and Alphabet. They develop and power the infrastructure that drives modern artificial intelligence.