How LLM Retrievers Can Modernize Your Data Systems

Every business faces the challenge of handling vast amounts of data. LLM retrievers can turn this challenge into a significant advantage.

Unlike traditional keyword-only search tools, LLM retrievers combine keyword search and vector search to retrieve relevant documents. Keyword search matches exact terms. Vector search captures the meaning of the query, so documents that use different wording can still be found. Together, they improve the chances that the most relevant documents are retrieved.
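The Python sketch below is a rough illustration of hybrid retrieval. It scores a toy corpus in two ways: Okapi BM25 for exact keyword matches and cosine similarity for semantic closeness. It then blends the two scores with a weight `alpha`. Everything here is hypothetical: the corpus, the hand-made 3-dimensional vectors standing in for a real embedding model, and the names `hybrid_search` and `alpha`. It shows the general technique, not how any particular LLM retriever implements it.

```python
import math
from collections import Counter

# Toy corpus. The 3-d vectors are hand-made stand-ins for the output of a
# real embedding model (hypothetical values, for illustration only).
DOCS = {
    "d1": "reset your account password from the settings page",
    "d2": "how to recover access when you forget your login",
    "d3": "quarterly revenue report for the sales team",
}
EMBEDDINGS = {
    "d1": [0.9, 0.1, 0.0],
    "d2": [0.8, 0.2, 0.1],
    "d3": [0.0, 0.1, 0.9],
}


def tokenize(text):
    return text.lower().split()


def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Okapi BM25 keyword score per document (non-negative idf variant)."""
    tokenized = {doc_id: tokenize(text) for doc_id, text in docs.items()}
    n = len(tokenized)
    avgdl = sum(len(toks) for toks in tokenized.values()) / n
    # Number of documents that contain each term.
    df = Counter(term for toks in tokenized.values() for term in set(toks))
    scores = {}
    for doc_id, toks in tokenized.items():
        tf = Counter(toks)
        score = 0.0
        for term in tokenize(query):
            if term not in tf:
                continue  # keyword search only rewards exact term matches
            idf = math.log(1 + (n - df[term] + 0.5) / (df[term] + 0.5))
            norm = k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * tf[term] * (k1 + 1) / (tf[term] + norm)
        scores[doc_id] = score
    return scores


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def hybrid_search(query, query_vec, alpha=0.5):
    """Blend max-normalized BM25 with cosine similarity; alpha weights keywords."""
    kw = bm25_scores(query, DOCS)
    top_kw = max(kw.values()) or 1.0  # avoid dividing by zero when nothing matches
    combined = {
        doc_id: alpha * kw[doc_id] / top_kw
        + (1 - alpha) * cosine(query_vec, EMBEDDINGS[doc_id])
        for doc_id in DOCS
    }
    return sorted(combined, key=combined.get, reverse=True)


# The query vector is also hypothetical: imagine it came from the same model.
print(hybrid_search("forgot login", [0.85, 0.15, 0.05]))  # ['d2', 'd1', 'd3']
```

Here d2 ranks first because it shares the keyword "login" with the query. d1 still outranks d3 on vector similarity alone, even though it has no word in common with the query. A weighted sum of normalized scores is only one way to combine the two signals. Rank-based fusion is another common choice.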

With LLM retrievers, your team spends less time digging through data and more time making informed decisions. Because these tools capture the context and nuance of your queries, they return more relevant and useful information.

Plus, as your business grows and your data needs increase, they can scale to handle the extra load and keep everything running smoothly.

In this article, we'll explore what LLM retrievers are and look at the practical aspects of using this technology in your business.

How LLM Retrievers Are Improving Data Search

Large Language Models (LLMs) have made significant progress. At first, they could only handle simple text and small amounts of data. They were helpful but had clear limits.

As technology improved, researchers created better models. These new models could process more data and understand more complex text. Techniques such as the transformer architecture, combined with much larger training datasets, let them capture longer-range context and broader ideas.

Next came LLM retrievers. These systems combined LLMs with search technology to find relevant information in large datasets. They used keyword search to match exact terms and vector search to capture the meaning of the text.

More recent improvements added machine learning models that re-rank search results. The system first retrieves a set of candidate documents, then a re-ranking model reorders them so the most relevant results appear first. This combination of keyword search, vector search, and re-ranking greatly improved LLM retrievers.
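The two-stage idea described above (retrieve candidates, then re-rank them) can be made concrete with a minimal sketch. The second-stage re-ranker below is a tiny logistic-regression scorer over three hand-picked features. The feature set, the `WEIGHTS` and `BIAS` values, and the function names are hypothetical. A production re-ranker would learn its parameters from labeled data, and many systems use a neural cross-encoder instead.

```python
import math

# Hypothetical parameters. A real re-ranker would learn these from labeled
# (query, document, relevant?) examples rather than having them hand-set.
WEIGHTS = {"first_stage": 1.0, "term_coverage": 2.0, "phrase_match": 1.5}
BIAS = -2.0


def features(query, doc_text, first_stage_score):
    """Hand-picked query-document features for the re-ranking model."""
    q_terms = query.lower().split()
    d_terms = set(doc_text.lower().split())
    coverage = sum(term in d_terms for term in q_terms) / len(q_terms)
    phrase = 1.0 if query.lower() in doc_text.lower() else 0.0
    return {
        "first_stage": first_stage_score,
        "term_coverage": coverage,
        "phrase_match": phrase,
    }


def relevance_probability(feats):
    """Logistic model: sigmoid of a weighted sum of the features."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in feats.items())
    return 1 / (1 + math.exp(-z))


def rerank(query, candidates):
    """candidates: (doc_id, text, first_stage_score) tuples from retrieval."""
    scored = [
        (relevance_probability(features(query, text, score)), doc_id)
        for doc_id, text, score in candidates
    ]
    return [doc_id for _, doc_id in sorted(scored, reverse=True)]


candidates = [
    ("a", "password reset steps for new accounts", 0.9),
    ("b", "how to reset your password in settings", 0.7),
    ("c", "office opening hours", 0.8),
]
print(rerank("reset your password", candidates))  # ['b', 'a', 'c']
```

The first stage ranked a, c, b. The re-ranker moves b to the top because it covers every query term and contains the exact phrase. Because the re-ranker scores only a short candidate list, it can afford to spend more computation per document than the first-stage search.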

Today, LLM retrievers are used in many fields. In healthcare, they quickly find relevant research. In education, they provide accurate information to students. Businesses use them for market analysis and customer service.

The growth of LLM retrievers shows how they have evolved from basic models to advanced systems that use multiple technologies. These advancements make information retrieval faster, more accurate, and more useful across different industries.

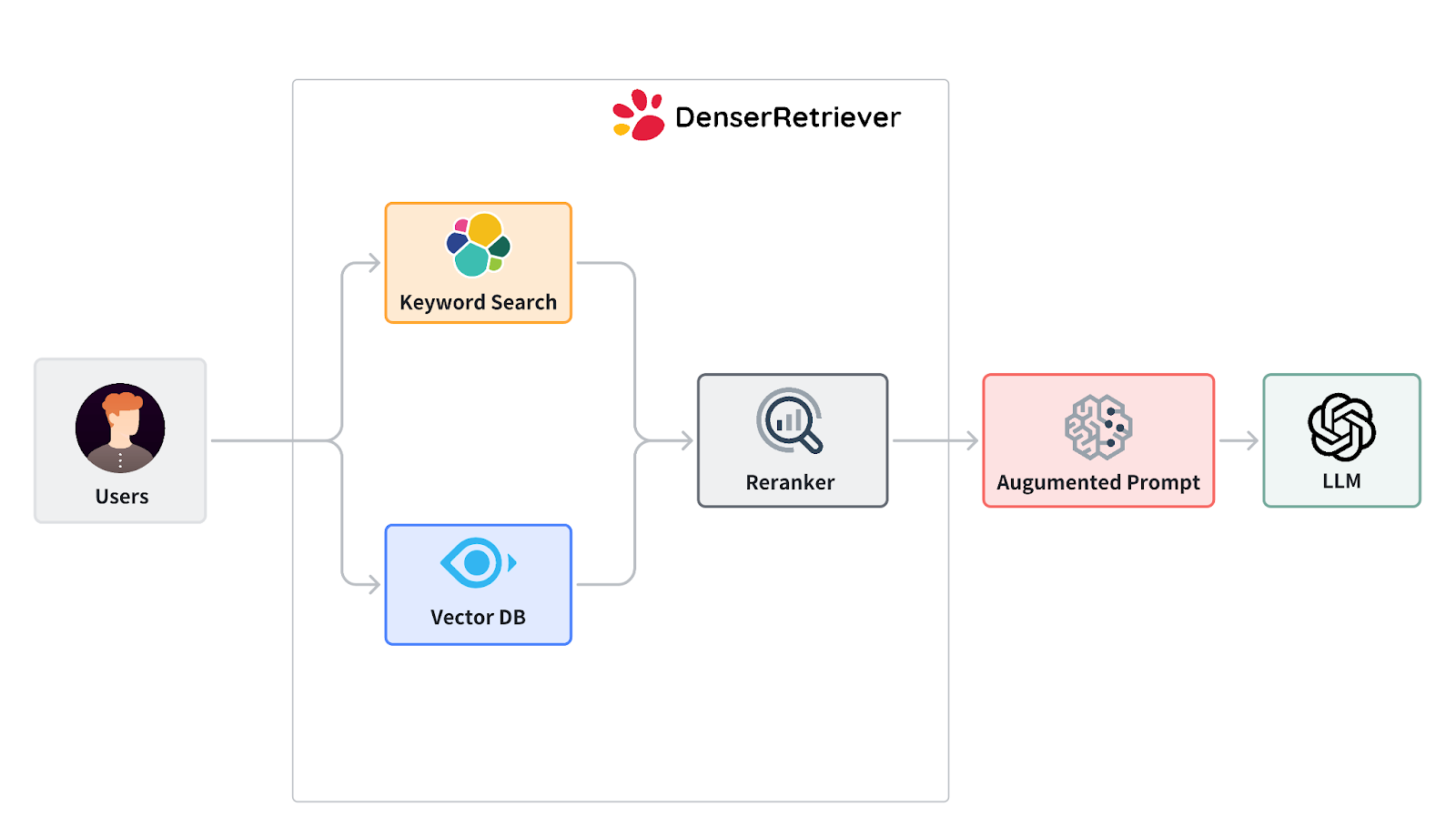

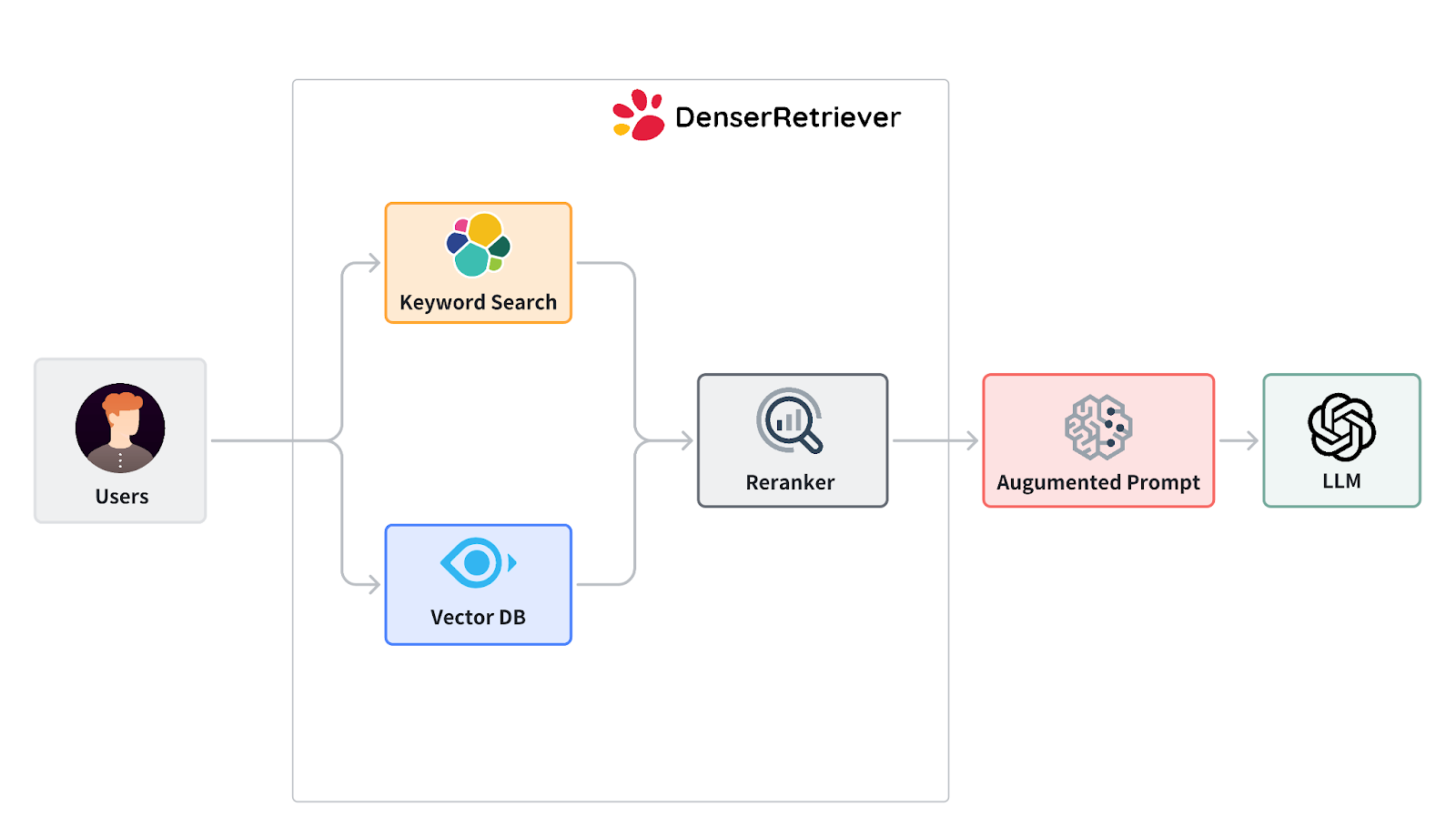

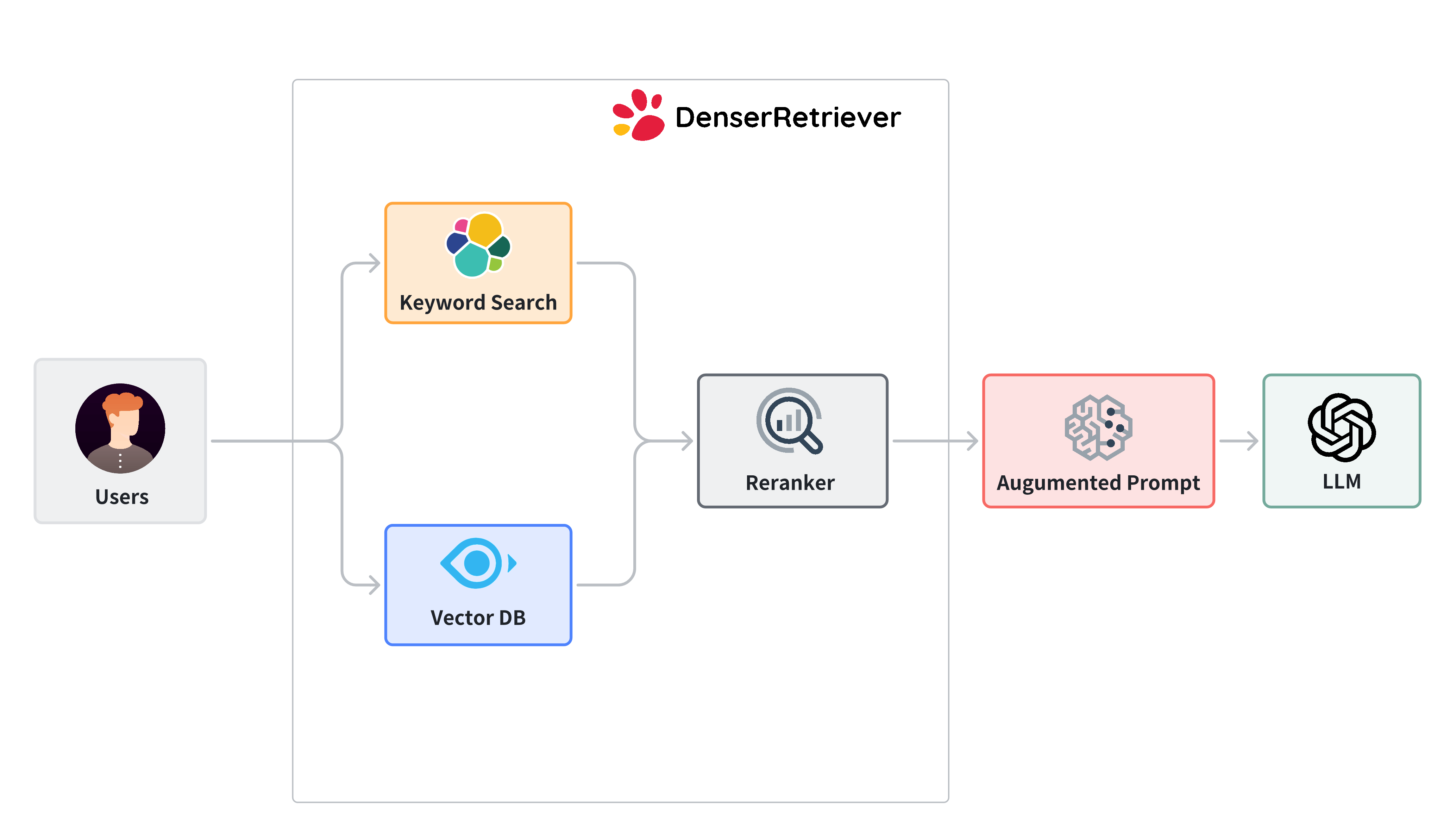

How LLM Retrievers Work

An LLM retriever uses a combination of technologies to find information. Let's break down the process:

- Input: You provide the documents or data to be searched, along with your query.

- Keyword search: The system looks for exact word matches in the data.

- Vector search: The system also converts the query and the documents into vectors (embeddings) and finds the documents whose vectors are closest to the query's. This captures meaning beyond exact words.

- Results integration: It merges the findings from both searches into a single list of candidates.

- Re-ranking: A machine learning model then re-ranks these candidates so the most relevant come first.

- Output: The system presents the sorted results.

- Retrieval Augmented Generation (RAG): In RAG setups, the top-ranked documents are also passed to an LLM, which generates a response based on the information in those documents.
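The results-integration and generation steps above can be sketched as follows. Reciprocal Rank Fusion (RRF) is used here as one common way to merge keyword and vector rankings, because it needs only ranks rather than comparable scores. The retriever you use may merge results differently. The ranked lists, the documents, and the prompt template are hypothetical, and the call to an actual LLM is left out.

```python
def reciprocal_rank_fusion(rankings, k=60):
    """Merge ranked lists of doc ids; each list adds 1 / (k + rank) per doc."""
    scores = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    # Highest fused score first; ties broken by doc id for stable output.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


def build_rag_prompt(question, ranked_ids, docs, top_n=2):
    """Put the top-ranked documents into a prompt for the generation step."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in ranked_ids[:top_n])
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


# Hypothetical outputs of the keyword and vector searches.
keyword_ranking = ["d2", "d4", "d1"]
vector_ranking = ["d1", "d2", "d3"]
docs = {
    "d1": "Reset your account password from the settings page.",
    "d2": "If you forget your login, use the account recovery form.",
    "d3": "Two-factor authentication adds a second login step.",
    "d4": "Login sessions expire after 30 days of inactivity.",
}

fused = reciprocal_rank_fusion([keyword_ranking, vector_ranking])
print(fused)  # ['d2', 'd1', 'd4', 'd3']
prompt = build_rag_prompt("How do I get back into my account?", fused, docs)
print(prompt)  # this prompt would then be sent to an LLM (not shown)
```

Documents that appear near the top of both lists (d2, d1) rise above documents found by only one search (d4, d3). The constant `k=60` is a widely used default that dampens the influence of the very top ranks.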

More advanced setups may include a feedback loop. User interactions with the search results, such as clicking a link or reading a document, are used to further train and refine the models. This ongoing learning process helps improve the accuracy and relevance of future searches.
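A full feedback loop would retrain the models on interaction logs. As a simpler stand-in, the sketch below turns click logs into a smoothed click-through rate that nudges future rankings. The `ClickFeedback` class, its smoothing priors, and the blending `weight` are hypothetical choices for illustration, not a description of any specific product.

```python
from collections import defaultdict


class ClickFeedback:
    """Track impressions and clicks per document and turn them into a boost."""

    def __init__(self, prior_clicks=1.0, prior_impressions=10.0, weight=0.3):
        # Smoothing priors keep a single click on a new document from dominating.
        self.prior_clicks = prior_clicks
        self.prior_impressions = prior_impressions
        self.weight = weight  # share of the final score that comes from feedback
        self.clicks = defaultdict(int)
        self.impressions = defaultdict(int)

    def record(self, shown_doc_ids, clicked_doc_id=None):
        """Log one result page: every shown doc gets an impression."""
        for doc_id in shown_doc_ids:
            self.impressions[doc_id] += 1
        if clicked_doc_id is not None:
            self.clicks[clicked_doc_id] += 1

    def ctr(self, doc_id):
        """Smoothed click-through rate for a document."""
        return (self.clicks[doc_id] + self.prior_clicks) / (
            self.impressions[doc_id] + self.prior_impressions
        )

    def adjust(self, scored_results):
        """scored_results: dict doc_id -> retrieval score; returns re-sorted ids."""
        adjusted = {
            doc_id: (1 - self.weight) * score + self.weight * self.ctr(doc_id)
            for doc_id, score in scored_results.items()
        }
        return sorted(adjusted, key=adjusted.get, reverse=True)


fb = ClickFeedback()
for _ in range(20):
    fb.record(["d1", "d2"], clicked_doc_id="d2")  # users keep choosing d2
print(fb.adjust({"d1": 0.50, "d2": 0.45}))  # ['d2', 'd1']
```

Raw clicks are a noisy signal, since users tend to click whatever is ranked first. Real systems therefore usually correct for this position bias before learning from click data.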

Applications of LLM Retrievers in Various Industries

LLM retrievers are changing the way different industries find and use information. Here's how they're being used in various sectors:

Healthcare

Doctors use LLM retrievers to quickly access medical research, which helps them make diagnoses and create treatment plans. LLM retrievers also support drug discovery by sifting through vast databases of chemical compounds.

Legal Industry

Law firms employ these systems to search through case law and legal precedents. This speeds up legal research, helping lawyers prepare for cases more efficiently.

Finance

In finance, LLM retrievers analyze market data and reports. They help analysts forecast market trends and make investment decisions.

Education

Educators and students use these tools to find academic papers and resources. This supports learning and research initiatives.

Customer Service

Companies integrate LLM retrievers into chatbots and support systems. These chatbots answer customer inquiries quickly, improving response times and satisfaction.

Media and Entertainment

In media, these retrievers help journalists and content creators research topics quickly. They gather background information, check facts, and explore related stories.

Retail & E-commerce

Retailers use them to manage and analyze customer reviews and feedback. This helps them understand consumer needs and improve service.

Search functionality is critical in e-commerce. LLM retrievers improve search engines on retail websites by understanding natural language queries and delivering more relevant search results.

Introducing Denser Retriever

Denser Retriever is designed for rapid deployment and versatility. Installation uses a Docker Compose setup, so users can get up and running quickly. Beyond the initial setup, it offers a self-hosted option that can scale up to enterprise-level production environments.

Denser Retriever also focuses on accuracy and efficiency. It is evaluated on the retrieval datasets of MTEB (the Massive Text Embedding Benchmark), a standard benchmark for measuring retrieval performance. The tool is built not only for ease of use but also to set a high bar for retrieval accuracy.

For detailed instructions on installing Denser Retriever, building a retriever index from a text document or web page, and querying that index, see the official documentation and resources below:

- Denser Retriever Documentation: Visit the documentation for installation and usage guides.

- Denser Retriever Repository: Explore the repository for more insights and tools.

Benefits of Implementing Denser Retriever

Implementing Denser Retriever in your systems comes with a host of advantages that cater to a wide range of operational and technical needs:

Open Source Advantage

As an open-source project, Denser Retriever offers complete transparency in its operations and codebase. It benefits from ongoing improvements and innovations contributed by a global community of developers.

Production-Ready Stability

Denser Retriever is built to be ready for production deployment. It provides the robustness, stability, and reliability needed for real-world applications, which also helps reduce the time and resources spent on development and troubleshooting.

Cutting-Edge Accuracy

With its state-of-the-art accuracy, this tool improves the precision of AI-driven applications. This level of accuracy helps businesses and researchers trust the outputs, leading to better decisions and improved outcomes.

Scalable Performance

Denser Retriever is designed to scale with your business needs. As your data grows or the number of users increases, it is built to handle varying loads efficiently, making it suitable for businesses of all sizes.

Versatile and Adaptable

The tool's flexibility makes it a valuable asset across various industries. It can be customized to meet specific needs, such as in-depth analytical tasks, customer service improvements, or complex academic research.

Get Faster, Smarter Data Retrieval with Denser Retriever!

Ready to transform your data management? Denser Retriever can give you the competitive edge you need!

Set up Denser Retriever in just minutes with a simple Docker Compose command. Take advantage of our self-hosted solution for enterprise-level deployment.

Deploy now or contact us to transform your data handling with Denser Retriever's advanced solutions!

FAQs About LLM Retrievers

What is a vector store?

A vector store is a database that stores vector embeddings: numerical representations of text that capture its meaning. In LLM retrievers, it plays a crucial role. The retriever uses it to search, retrieve, and rank relevant documents based on the semantic similarity between the query and the content in the database.
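A minimal in-memory vector store might look like the following sketch. It assumes the embeddings have already been produced by some model; the 3-dimensional vectors here are made up. The class and method names are hypothetical and do not correspond to any particular product's API.

```python
import math


class InMemoryVectorStore:
    """Minimal vector store: keeps (id, embedding, text), searches by cosine."""

    def __init__(self):
        self._items = []

    @staticmethod
    def _normalize(vector):
        norm = math.sqrt(sum(x * x for x in vector))
        return [x / norm for x in vector]

    def add(self, doc_id, embedding, text):
        # Store unit-length vectors so a dot product equals cosine similarity.
        self._items.append((doc_id, self._normalize(embedding), text))

    def search(self, query_embedding, k=3):
        """Return the k most similar items as (doc_id, score, text)."""
        q = self._normalize(query_embedding)
        scored = [
            (sum(a * b for a, b in zip(q, vec)), doc_id, text)
            for doc_id, vec, text in self._items
        ]
        scored.sort(key=lambda item: item[0], reverse=True)
        return [(doc_id, round(score, 3), text) for score, doc_id, text in scored[:k]]


store = InMemoryVectorStore()
store.add("faq-1", [0.9, 0.1, 0.0], "How to reset a password")
store.add("faq-2", [0.1, 0.9, 0.0], "Shipping times")
store.add("faq-3", [0.7, 0.3, 0.1], "Account recovery")
for doc_id, score, text in store.search([1.0, 0.2, 0.0], k=2):
    print(doc_id, score, text)  # faq-1 first, then faq-3
```

This brute-force search compares the query against every stored vector, which is fine for small collections. Production vector stores typically use approximate nearest neighbor indexes, such as HNSW, to stay fast at scale.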

How does a context window work in language models?

A context window is the amount of text, measured in tokens, that a language model can consider at once when interpreting input and generating a response. It limits how much surrounding text, including any retrieved documents, the model can use to keep its output coherent and relevant.
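Because the context window is finite, an application built on a retriever has to decide how much retrieved text to pass to the model. The sketch below greedily packs chunks, ranked best-first, into a token budget while reserving room for the answer. Approximating tokens by whitespace-separated words is an assumption that keeps the example dependency-free. Real models count subword tokens with their own tokenizer, so actual counts are usually higher.

```python
def pack_context(chunks, max_tokens, reserved_for_answer=0):
    """Greedily add ranked chunks until the approximate token budget is used.

    Token counts are approximated by whitespace-separated words (an
    assumption); a real model's tokenizer would give different counts.
    """
    budget = max_tokens - reserved_for_answer
    packed, used = [], 0
    for chunk in chunks:  # chunks are assumed to be sorted best-first
        cost = len(chunk.split())
        if used + cost > budget:
            break  # stop at the first chunk that does not fit
        packed.append(chunk)
        used += cost
    return packed, used


chunks = ["alpha beta gamma", "delta epsilon", "zeta eta theta iota"]
print(pack_context(chunks, max_tokens=8, reserved_for_answer=2))
# (['alpha beta gamma', 'delta epsilon'], 5)
```

Stopping at the first chunk that does not fit keeps the packed context a strict prefix of the ranking. An alternative is to skip that chunk and try smaller ones further down, which fills the budget more fully.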

How quickly can an LLM retriever process a given query?

Processing speed varies with the complexity of the query and the size of the dataset. However, these systems are designed to provide real-time or near-real-time responses, thanks to efficient algorithms such as approximate nearest neighbor search and sufficient computational resources.