How Chatbot Feedback Improves Chatbot Performance

A chatbot is only as good as the information it provides. Users might drop off, ask the same questions repeatedly, or get frustrated when responses don’t meet their expectations.

The real challenge isn’t just chatbot performance itself but how you collect, analyze, and apply chatbot feedback to improve interactions.

If you’re only gathering surface-level feedback, you’re overlooking key insights that could drive better engagement and improve customer experience.

Simply launching a chatbot isn’t enough—you need to refine it continuously based on real user interactions.

This guide will show you how to use chatbot feedback better and create a chatbot that is smarter, faster, and more aligned with user needs.

You’ll also see how Denser.ai provides AI-driven insights that help you analyze chatbot interactions in real time, turning chatbot failures into growth opportunities.

Why Basic Feedback Fails

One of the biggest challenges you may face is identifying where your chatbot is underperforming. Users may abandon conversations when they don’t get helpful answers. But without a way to gather feedback, you won’t know what went wrong.

If you collect customer feedback through star ratings, thumbs-up/down reactions, or optional surveys, you’re missing valuable insights. These methods might give a general idea of user satisfaction, but they fail to explain why a chatbot response was helpful or frustrating.

Your chatbot relies on a strong knowledge base, which needs regular updates to remain accurate and relevant. However, without a structured way to collect feedback, it’s nearly impossible to pinpoint where your chatbot is falling short.

Basic rating systems and occasional reviews won't be enough if your chatbot frequently misunderstands customer inquiries or provides incomplete responses.

You need a real-time feedback system that allows you to track recurring issues, analyze user sentiment, and update your chatbot’s knowledge base to improve response accuracy.

A Smarter Way to Collect Chatbot Feedback

Rather than depending only on what users say, you should also analyze how they interact with your chatbot. Behavior-based feedback reveals what users won’t always say outright.

If users are abandoning conversations, asking the same question multiple times, or frequently requesting human assistance, your chatbot needs improvement.

To find recurring issues, track:

- Conversation drop-off points: Where users exit without completing a chat

- Repeated questions: Signs that answers are unclear or unhelpful

- User sentiment: AI-driven analysis of frustration, confusion, or satisfaction

- Escalations to human agents: Reveals chatbot weaknesses that need fixing
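As a rough illustration, the behavior signals above can be computed from conversation logs. The sketch below is a minimal example, not any platform's actual schema: the `Conversation` record, its field names, and the definition of a drop-off (a chat that ends unresolved and without a handoff) are all assumptions made for the example. Sentiment is left out because it needs a separate text-analysis step.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Conversation:
    """Hypothetical log record; field names are illustrative assumptions."""
    user_messages: list[str]
    resolved: bool = False      # user reached an answer or completed the flow
    escalated: bool = False     # user asked for a human agent
    last_step: str = "unknown"  # step of the flow where the chat ended


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so near-identical questions match."""
    return " ".join(text.lower().split())


def has_repeated_question(conv: Conversation) -> bool:
    """True if the user sent the same (normalized) message more than once."""
    counts = Counter(normalize(m) for m in conv.user_messages)
    return any(n > 1 for n in counts.values())


def summarize(conversations: list[Conversation]) -> dict:
    """Compute drop-off, repeat, and escalation rates for a batch of chats."""
    total = len(conversations)
    if total == 0:
        return {"drop_off_rate": 0.0, "repeat_rate": 0.0,
                "escalation_rate": 0.0, "drop_off_points": {}}
    # Assumed definition: a drop-off ended unresolved and without a handoff.
    dropped = [c for c in conversations if not c.resolved and not c.escalated]
    return {
        "drop_off_rate": len(dropped) / total,
        "repeat_rate": sum(has_repeated_question(c) for c in conversations) / total,
        "escalation_rate": sum(c.escalated for c in conversations) / total,
        "drop_off_points": dict(Counter(c.last_step for c in dropped)),
    }


# Made-up sample data for demonstration only.
logs = [
    Conversation(["Where is my order?", "where is my order?"], last_step="order_lookup"),
    Conversation(["Do you ship to Canada?"], resolved=True, last_step="shipping"),
    Conversation(["I need a refund", "Talk to a person"], escalated=True, last_step="refund"),
    Conversation(["What are your hours?"], last_step="greeting"),
]
report = summarize(logs)
print(report)
```

Exact-match comparison after normalization only catches literal repeats. Rephrased questions would need fuzzy or semantic matching, which this sketch does not attempt.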

Basic chatbot analytics don’t always reveal why users struggle with chatbot interactions. Denser.ai provides real-time analysis of chatbot performance, helping you pinpoint weak areas and improve responses faster.

Sign up for a free trial or schedule a demo to see how AI-driven feedback analysis can transform your chatbot interactions!

How to Use Feedback to Improve Performance

Chatbot feedback is an opportunity to create better conversations, improve customer satisfaction, and drive business growth. A chatbot that consistently provides helpful responses will increase customer engagement and reduce reliance on human agents.

To make the most of chatbot feedback, move beyond collecting data and take concrete steps to improve chatbot performance. When feedback is applied correctly, chatbots can provide faster, more accurate responses.

Here’s how you can use chatbot feedback to improve performance:

Turn Feedback Into Actionable Insights

Businesses that successfully collect user feedback can improve chatbot interactions and increase retention.

A chatbot consistently delivering relevant responses reduces the need for human support. However, raw feedback alone is not enough. It must be analyzed and applied strategically.

If users frequently ask for delivery timelines but still contact support for clarification, it’s a sign that the chatbot’s responses aren’t detailed enough.

Similarly, if chatbot conversations frequently end without resolution, it may indicate that the chatbot needs better training or additional information.

Refine Responses Based on User Behavior

Users may not always leave direct feedback, but their behavior tells a story. If certain chatbot responses lead to repeated queries, it’s a sign that the information provided is unclear.

High escalation rates—where users request human support—often indicate that the chatbot is failing to meet expectations.

Refining chatbot responses based on feedback should involve the following:

- Adjusting responses to better match user intent

- Providing more relevant details instead of generic replies

- Improving the chatbot’s natural language processing so it understands variations in phrasing

If users frequently ask, "Do you have a return policy?" and the chatbot simply replies, "Yes, we have a return policy," without linking to relevant information, this response adds no value. A better response would be:

"Yes, we accept returns within 30 days. Would you like to see the full policy?"

Don't Overcomplicate Chatbot Interactions

A chatbot may have the right answers, but if it takes too long to deliver them or asks unnecessary questions, users will get frustrated.

Shortening response times and improving conversation flow can help create a user-friendly interface that encourages engagement.

One way to reduce friction is to remove unnecessary steps. If a chatbot asks, "Would you like to see shipping rates?" before displaying them, it’s an extra step that can be skipped.

If a user types the wrong order number, a chatbot should also allow them to re-enter it instead of restarting the conversation.
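Here is one way the re-entry behavior could look in code. This is a minimal sketch under stated assumptions: the six-digit order format, the three-attempt limit, the `state` dictionary, and the `handle_order_number` function are all hypothetical and not taken from any particular chatbot framework.

```python
import re

ORDER_PATTERN = re.compile(r"\d{6}")  # assumed format: exactly six digits
MAX_ATTEMPTS = 3                      # assumed limit before offering a human


def handle_order_number(state: dict, user_input: str) -> str:
    """Validate an order number; on failure, re-prompt without resetting state."""
    candidate = user_input.strip()
    if ORDER_PATTERN.fullmatch(candidate):
        state["order_number"] = candidate
        state["attempts"] = 0
        return f"Thanks! Looking up order {candidate}."
    # Invalid input: count the attempt but keep everything else in `state`.
    state["attempts"] = state.get("attempts", 0) + 1
    if state["attempts"] >= MAX_ATTEMPTS:
        return "I'm having trouble finding that order. Would you like to talk to an agent?"
    return "That order number doesn't look right. Please re-enter the 6-digit number."


state = {"topic": "order_status"}  # earlier context survives a failed attempt
first = handle_order_number(state, "12A45")
second = handle_order_number(state, " 123456 ")
print(first)
print(second)
```

The key design choice is that a failed attempt only increments a counter. The rest of the conversation state stays intact, so the user never has to start over.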

You can also provide quick action buttons. Instead of forcing users to type responses, offering predefined options can speed up interactions.

Measure Improvements Through Testing

Once chatbot responses are refined, it’s important to measure their impact. If a chatbot previously had a high drop-off rate but now retains users for longer conversations, this indicates an improvement.

You can measure improvements by A/B testing different chatbot responses to see which version performs better and by tracking sentiment changes in user conversations. If chatbot feedback previously showed frustration and now contains more positive responses, the changes are working.
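When comparing two response variants, it helps to check whether the difference is larger than random noise. The sketch below uses a standard two-proportion z-test on resolution counts. The function name and the sample numbers are made up for illustration, and the test assumes reasonably large, independent samples.

```python
import math


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for the difference in resolution rates."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled rate under the null hypothesis that both variants perform equally.
    pooled = (success_a + success_b) / (total_a + total_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: variant A resolved 120 of 400 chats, variant B 156 of 400.
z, p = two_proportion_z_test(120, 400, 156, 400)
print(f"z = {z:.2f}, p = {p:.4f}")
if p < 0.05:
    print("The difference is unlikely to be chance at the 5% level.")
```

A positive `z` means variant B resolved a higher share of conversations. The 5% threshold is a common convention, not a rule, so choose the level that fits how costly a wrong decision would be.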

You should also compare before-and-after performance metrics such as response accuracy, resolution time, and escalation rates.

How Denser.ai Automates Chatbot Feedback Optimization

Manually optimizing a chatbot can be overwhelming, especially as conversations grow in volume. If left unmonitored, chatbots can start providing outdated or ineffective responses.

AI chatbot solutions like Denser.ai address these challenges by automating chatbot optimization. Denser.ai tracks user interactions, analyzes feedback in real time, and fine-tunes chatbot responses without manual effort.

Real-Time Query Tracking

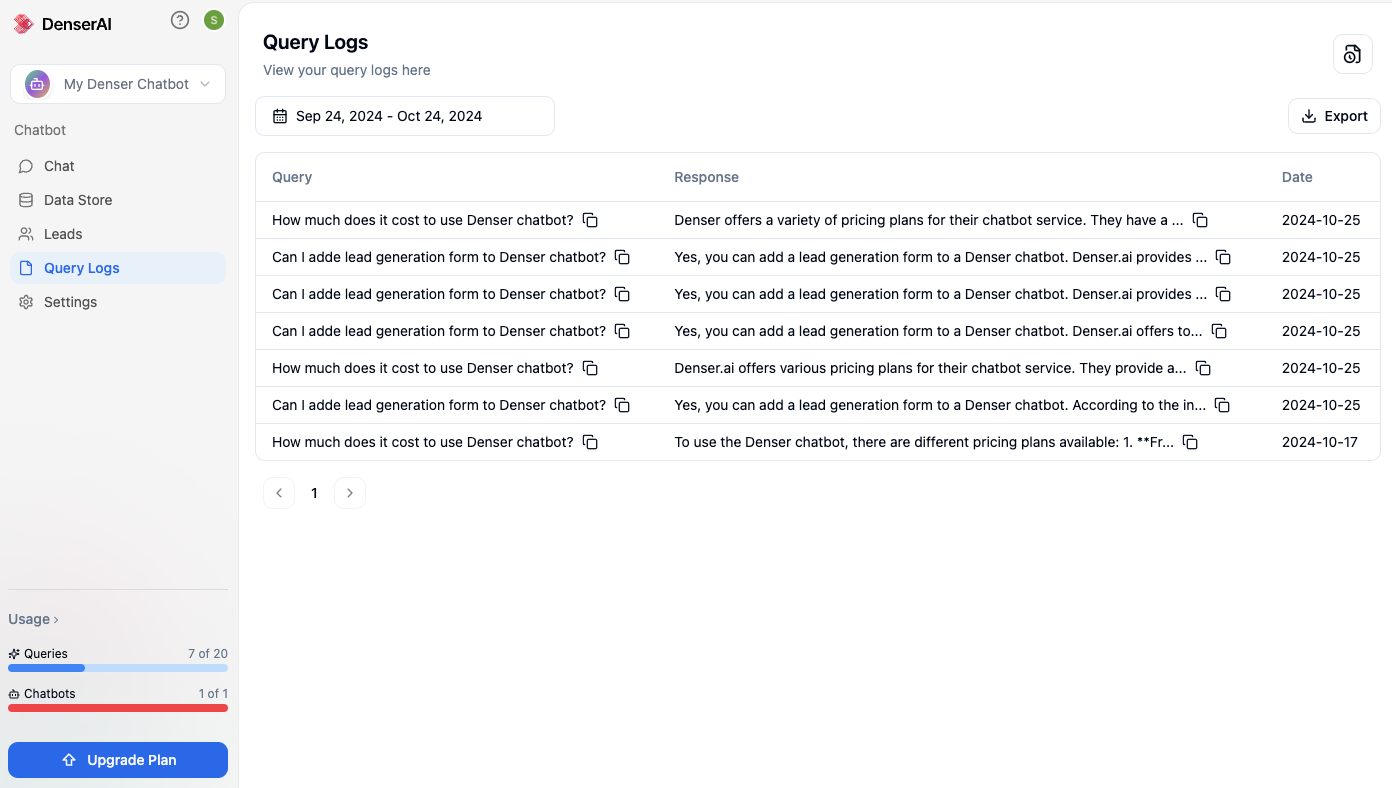

Monitoring chatbot feedback requires access to detailed query logs that record customer questions and chatbot responses.

Denser.ai provides an intuitive query-tracking system. You can review past interactions, identify patterns in user frustrations, and refine chatbot responses based on real conversations.

To access chatbot query logs:

- Open the chatbot management page.

- Select the "Query Logs" section from the side panel.

- View chat records listed in chronological order, with the most recent queries displayed first.

Query Log Retention & Data Management

As chatbots process thousands of user interactions, storing all chatbot feedback becomes impractical.

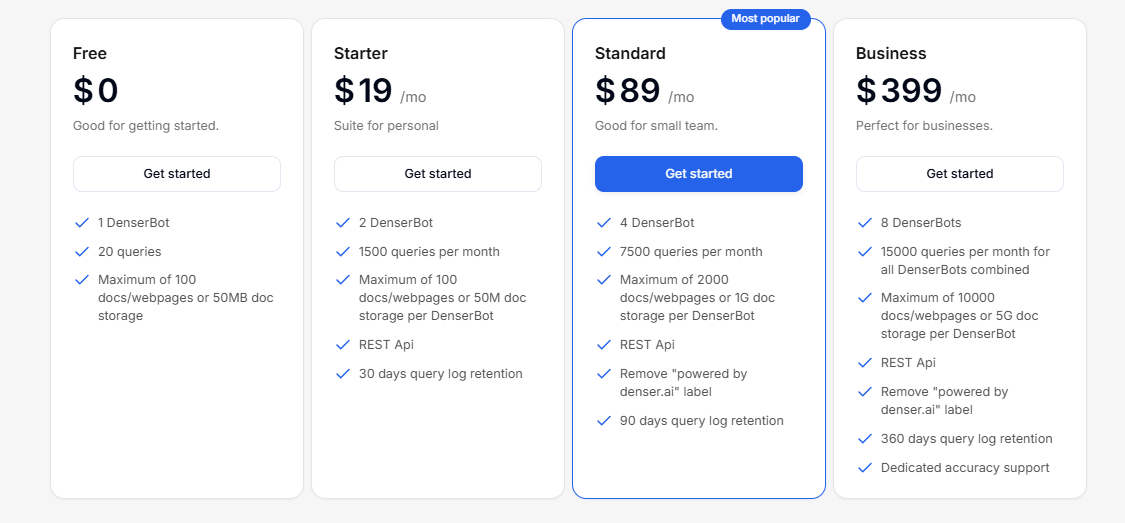

Denser.ai manages this by automatically retaining logs based on the user’s subscription tier.

Businesses using the free trial can access up to 20 chatbot queries without deletion.

Starter-tier users have logs stored for 30 days, while Standard and Business tiers retain chatbot feedback for 90 and 360 days, respectively.
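If you plan your own exports around these windows, a small helper can tell you whether a log is still within its retention period. The sketch below only encodes the tier durations stated above. The helper name, the lowercase tier keys, and the inclusive boundary are assumptions, and this is not a Denser.ai API.

```python
from datetime import date, timedelta

# Retention windows as described above. The free trial is capped by query
# count rather than by age, so it is kept separate from the day-based tiers.
RETENTION_DAYS = {"starter": 30, "standard": 90, "business": 360}
FREE_TRIAL_QUERY_LIMIT = 20


def is_within_retention(tier: str, logged_on: date, today: date) -> bool:
    """Hypothetical helper: is a log from `logged_on` still inside the window?

    Treats the final day as still retained (an assumption).
    """
    days = RETENTION_DAYS[tier.lower()]
    return today - logged_on <= timedelta(days=days)


logged = date(2025, 1, 1)
print(is_within_retention("Starter", logged, date(2025, 1, 20)))   # inside 30 days
print(is_within_retention("Starter", logged, date(2025, 3, 1)))    # past 30 days
```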

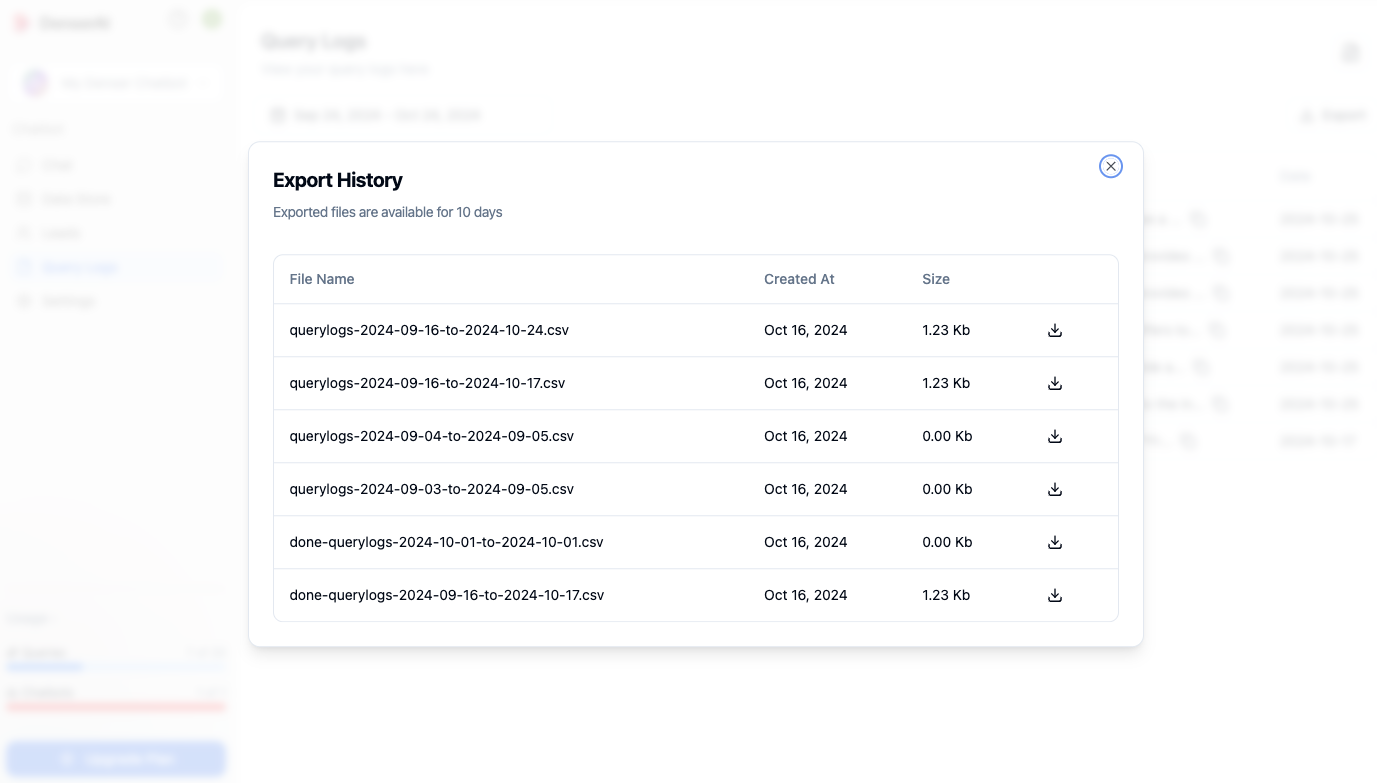

Exporting Query Logs as CSV

Denser.ai provides an export feature that enables you to download chatbot feedback records in CSV format. This is useful for long-term evaluation of chatbot interactions, training AI models with real user conversations, and auditing chatbot performance over time.

To export chatbot feedback, users select a date range, initiate the export, and download the file once processing is complete.

The exported CSV files remain available for ten days before automatic deletion, giving chatbot owners time to retrieve and store feedback data for further optimization.
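Once you have an export, a few lines of Python can surface the most frequently asked questions. This is a sketch only: the column names (`timestamp`, `query`, `response`) and the sample rows are assumptions, so check the header row of your actual file and adjust `query_column` to match.

```python
import csv
import io
from collections import Counter

# Stand-in for an exported file; column names and rows are made up.
SAMPLE_EXPORT = """timestamp,query,response
2025-01-05T10:00:00,Where is my order?,Please share your order number.
2025-01-05T10:02:00,where is my order?,Please share your order number.
2025-01-06T09:30:00,Do you offer refunds?,"Yes, within 30 days."
2025-01-07T14:15:00,Where is my order?,Please share your order number.
"""


def top_queries(csv_file, query_column="query", limit=5):
    """Count normalized queries and return the most frequent ones."""
    reader = csv.DictReader(csv_file)
    counts = Counter(
        " ".join(row[query_column].lower().split())  # lowercase, collapse spaces
        for row in reader
        if row.get(query_column)                     # skip rows with no query text
    )
    return counts.most_common(limit)


result = top_queries(io.StringIO(SAMPLE_EXPORT))
for query, count in result:
    print(f"{count}x  {query}")
```

For a real file, pass an open file handle instead of the in-memory sample, for example `open(path, newline="", encoding="utf-8")`, where `path` is wherever you saved the download.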

AI-Driven Chatbot Optimization

Denser.ai refines chatbot responses automatically using AI-driven learning. It detects patterns in user interactions and continuously adjusts chatbot answers to align with user needs.

The AI recognizes repeated user queries and modifies responses accordingly. It also analyzes sentiment to detect frustration and refine chatbot wording for a smoother experience.

Conversation flow is optimized by reducing unnecessary back-and-forth exchanges, which helps prevent user frustration and abandonment.

If a chatbot frequently receives the same unresolved question, Denser.ai updates its responses automatically to reduce the need for manual intervention.

Continuous Performance Monitoring & Adjustments

Denser.ai constantly tracks key chatbot analytics to maintain an efficient and user-friendly experience. The system monitors drop-off rates by identifying where users exit chatbot conversations without resolution.

Escalation rates are analyzed to determine how often users request a human agent, indicating chatbot limitations. Resolution success is measured to assess how effectively chatbot responses satisfy user queries.

Denser.ai removes the complexity of chatbot optimization by offering automated feedback analysis, AI-powered learning, and real-time chatbot adjustments.

Turn Raw Chatbot Data Into Actionable Improvements With Denser.ai

Basic ratings and surveys don’t provide enough detail to improve chatbot accuracy. You need a smarter way to collect feedback and turn it into actionable improvements.

With Denser.ai, you can go beyond basic analytics and understand the full picture of chatbot performance. You can track drop-off points, sentiment trends, and repeated queries to uncover what’s frustrating users.

Rather than manually sifting through logs, Denser.ai automates chatbot optimization by learning from past interactions and refining responses instantly.

Take control of your chatbot’s performance and start making data-driven improvements today. Sign up for a free trial or schedule a demo now!

FAQs About Chatbot Feedback

What is the feedback mechanism of a chatbot?

Feedback can come in different forms, including:

- Users provide feedback through direct ratings, reviews, or written comments about their chatbot experience.

- The chatbot analyzes user behavior, such as message drop-offs, repeated queries, or escalation to a human agent, to detect issues.

- AI-driven tools analyze text to determine if the user is satisfied, neutral, or frustrated.
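To make the third method concrete, here is a deliberately simple keyword-based classifier. It is a toy stand-in, not how AI-driven tools work in practice: production systems typically use trained language models, and the word lists and the `classify_sentiment` function here are invented for illustration.

```python
import re

# Tiny illustrative word lists; a production system would use a trained model.
NEGATIVE = {"useless", "frustrated", "annoying", "wrong", "terrible", "confusing"}
POSITIVE = {"thanks", "thank", "great", "helpful", "perfect", "awesome"}


def classify_sentiment(message: str) -> str:
    """Label a message as 'satisfied', 'neutral', or 'frustrated'."""
    words = re.findall(r"[a-z']+", message.lower())
    # Net score: positive word hits minus negative word hits.
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "satisfied"
    if score < 0:
        return "frustrated"
    return "neutral"


for text in ["Thanks, that was helpful!",
             "This is useless and confusing",
             "What are your hours?"]:
    print(f"{classify_sentiment(text):>10}  {text}")
```

Word lists like these miss negation ("not helpful") and sarcasm, which is one reason real tools rely on models trained on actual conversations.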

A strong chatbot feedback mechanism uses a combination of these methods to identify areas for improvement and refine responses over time. Messaging platforms like Facebook Messenger make it easy to collect feedback from users in real time.

AI platforms like Denser.ai automate this process by continuously analyzing feedback and optimizing chatbot conversations in real time.

What is an example of a chatbot message?

A chatbot message varies based on its purpose. Below are examples of chatbot responses in different scenarios:

- Customer support response: "Hi! I see you’re asking about your order status. Let me check that for you. Could you please provide your order number?"

- Sales inquiry response: "Looking for the best product for your needs? Based on your previous searches, I’d recommend [Product Name]. Would you like to see its features or customer reviews?"

A well-designed chatbot response should be clear, direct, and context-aware. If users frequently ask for clarification, it’s a sign that the chatbot’s responses may need improvement.

How to evaluate chatbot responses?

Evaluating chatbot responses involves analyzing accuracy, relevance, engagement, and sentiment.

AI-powered platforms like Denser.ai help you evaluate chatbot responses by tracking user sentiment, monitoring unresolved queries, and automatically adjusting chatbot answers based on past interactions.

Can chatbots learn from feedback?

AI-driven chatbots can learn from feedback and improve over time. Machine learning enables chatbots to analyze past conversations, detect errors, and refine their responses.